When we have multiple GPU nodes, each with multiple GPU cards, how can we collect GPU stats such as GPU utilization, graphics memory usage, and the list of processes currently using the GPU, and display them in the frontend? Once the NVIDIA driver is installed, all of this information is available from the `nvidia-smi` command.

nvidia-smi Usage

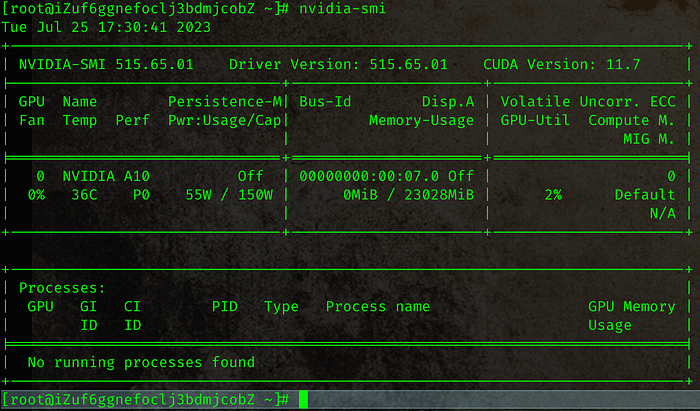

Running `nvidia-smi` on a GPU node prints detailed information about every GPU on that node.

We need to parse that output, extract the fields we want, format the GPU stats as JSON, and expose them to the frontend through an API.
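A minimal sketch of such an API, assuming each GPU node runs a small Node.js HTTP server. The route `/api/gpu` and the name `createGpuStatsServer` are hypothetical, and the stats provider is passed in as a function so the server does not depend on how the stats are collected:

```typescript
import * as http from 'http'

// Hypothetical provider type: on a GPU node this would be a function such as getGpuInfo().
type GpuStatsProvider = () => Promise<unknown[]>

// Sketch of a per-node API: GET /api/gpu answers with the node's GPU stats as JSON.
// The route is an assumption, not something nvidia-smi prescribes.
const createGpuStatsServer = (getStats: GpuStatsProvider): http.Server =>
  http.createServer(async (req, res) => {
    if (req.method !== 'GET' || req.url !== '/api/gpu') {
      res.writeHead(404, { 'Content-Type': 'application/json' })
      res.end(JSON.stringify({ error: 'not found' }))
      return
    }
    try {
      const stats = await getStats()
      res.writeHead(200, { 'Content-Type': 'application/json' })
      res.end(JSON.stringify(stats))
    } catch (err) {
      // Report collection failures (e.g. nvidia-smi missing) as a server error
      res.writeHead(500, { 'Content-Type': 'application/json' })
      res.end(JSON.stringify({ error: String(err) }))
    }
  })
```

On a GPU node this could be started with something like `createGpuStatsServer(getGpuInfo).listen(8080)`, where the port is arbitrary.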

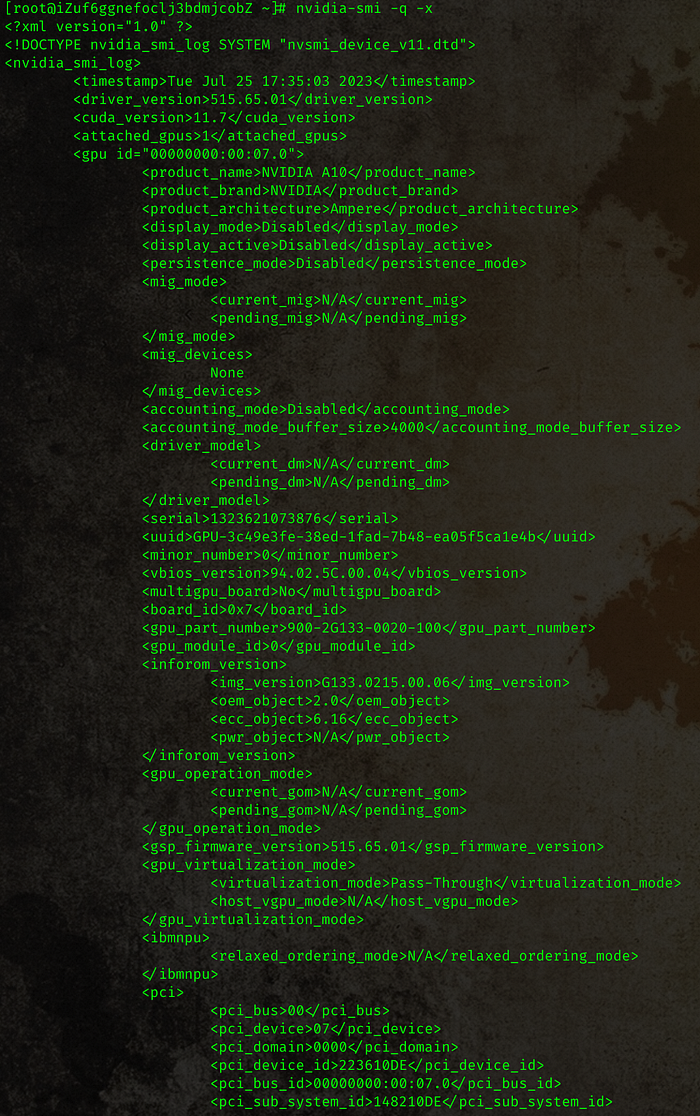

The default output of `nvidia-smi` is a human-readable table that is hard to parse. Instead, `nvidia-smi -q -x` prints the full query result as XML, and converting XML to JSON is much easier than parsing the default output.
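Even with XML, a couple of conversions remain. Text values carry units (for example `23028 MiB`, `1 %` or `37 C`), unsupported fields read `N/A`, and a parser that collapses single children (xml2js with `explicitArray: false`, as used below) returns an object for one element, an array for several, and an empty string for an empty element. The helpers `toNumber` and `toArray` are a hypothetical sketch of those two conversions, not part of the original code:

```typescript
// Convert a value such as "23028 MiB" or "1 %" to a number.
// parseFloat reads the leading number and ignores the unit suffix;
// "N/A" (unsupported field) becomes null instead of NaN.
const toNumber = (value: unknown): number | null => {
  const n = typeof value === 'string' ? parseFloat(value) : NaN
  return Number.isNaN(n) ? null : n
}

// Normalize what a single-child-collapsing XML parser returns:
// undefined or '' (empty element) -> [], one object -> [object], array -> array.
function toArray<T>(value: T | T[] | string | undefined): T[] {
  if (value === undefined || typeof value === 'string') return []
  return Array.isArray(value) ? value : [value]
}
```

`parseFloat` alone would turn `N/A` into `NaN`, which `JSON.stringify` writes out as `null`; returning `null` explicitly makes that case visible in the type.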

Parse the XML to JSON

We can do this with the following Node.js (TypeScript) code.

First, define a function that executes a command and collects its stdout and stderr.

```typescript
import { spawn } from 'child_process'

interface CommandResult {
  stdout: string
  stderr: string
}

// Run a command (without a shell) and collect its stdout/stderr.
// Resolves when the exit code is 0, rejects with the collected output otherwise.
const spawnProcessExecuteCommand = (cmd: string, args: string[], timeout?: number): Promise<CommandResult> =>
  new Promise((resolve, reject) => {
    const stdoutChunks: Buffer[] = []
    const stderrChunks: Buffer[] = []
    const collect = () => ({
      stdout: Buffer.concat(stdoutChunks).toString('utf8'),
      stderr: Buffer.concat(stderrChunks).toString('utf8'),
    })

    const subProcess = spawn(cmd, args)
    // Kill the process if it runs longer than `timeout` milliseconds
    const timer = timeout ? setTimeout(() => subProcess.kill('SIGKILL'), timeout) : undefined

    subProcess.stdout.on('data', (data: Buffer) => stdoutChunks.push(data))
    subProcess.stderr.on('data', (data: Buffer) => stderrChunks.push(data))

    // 'error' fires when the command cannot be started (e.g. nvidia-smi is not installed)
    subProcess.on('error', error => {
      clearTimeout(timer)
      reject({ ...collect(), error })
    })

    // 'close' fires after the process exits and its stdio streams are closed
    subProcess.on('close', (code, signal) => {
      clearTimeout(timer)
      const data = collect()
      if (code === 0) return resolve(data)
      console.error(`execute ${cmd} with args = %o, exit code = ${code}, signal = ${signal}, stdout = %s, stderr = %s`, args, data.stdout, data.stderr)
      reject({ ...data, error: { code, signal } })
    })
  })
```
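As a design alternative, Node's built-in `child_process.execFile` already buffers the output, enforces a timeout and rejects on a non-zero exit code, so a promisified version covers most of the function above. This is a sketch: `runCommand` is a hypothetical name, the 16 MiB `maxBuffer` is an assumed safety margin over Node's default, and note that `execFile` kills a timed-out child with `SIGTERM` by default rather than `SIGKILL`:

```typescript
import { execFile } from 'child_process'
import { promisify } from 'util'

const execFileAsync = promisify(execFile)

// Runs `cmd` without a shell; output is decoded as UTF-8 by default.
// Rejects if the exit code is non-zero, the timeout (ms) expires,
// or the output exceeds maxBuffer (bytes).
const runCommand = async (cmd: string, args: string[], timeout = 10000) => {
  const { stdout, stderr } = await execFileAsync(cmd, args, { timeout, maxBuffer: 16 * 1024 * 1024 })
  return { stdout, stderr }
}
```

`runCommand('nvidia-smi', ['-q', '-x'])` resolves to `{ stdout, stderr }`, the same shape that `spawnProcessExecuteCommand` returns.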

Then parse the XML from stdout and return the result as JSON.

```typescript
import * as xml2js from 'xml2js'

// explicitArray: false keeps single child elements as objects instead of one-element arrays;
// trim: true strips whitespace around text nodes
const xmlParser = new xml2js.Parser({ explicitArray: false, trim: true })

interface IGpu {
  id: number
  uuid: string
  driver: string
  cuda: string
  utilization: {
    gpu: number
    memory: { total: number, reserved: number, used: number } // MiB
    encoder: number
    decoder: number
  }
  processes?: { pid: number, process_name: string, type: string, used_memory: number }[]
  temperature: { current: number } // °C
}

// Values such as "23028 MiB" or "1 %" are converted with parseFloat, which ignores the unit suffix
const parseSingleGpuInfo = (gpu: any): Omit<IGpu, 'driver' | 'cuda'> => {
  const gpuUtilization = gpu.utilization
  const memory = gpu.fb_memory_usage
  // An empty <processes> element (no process on this GPU) is parsed as ''
  let processes = typeof gpu.processes === 'object' ? gpu.processes.process_info || [] : []
  // A single <process_info> is parsed as an object rather than an array
  if (!Array.isArray(processes)) processes = [processes]

  return {
    id: parseInt(gpu.minor_number, 10),
    uuid: gpu.uuid,
    utilization: {
      gpu: parseFloat(gpuUtilization.gpu_util),
      memory: {
        total: parseFloat(memory.total),
        reserved: parseFloat(memory.reserved),
        used: parseFloat(memory.used),
      },
      encoder: parseFloat(gpuUtilization.encoder_util),
      // The XML element is <decoder_util>
      decoder: parseFloat(gpuUtilization.decoder_util),
    },
    processes: processes.map((process: any) => ({
      ...process,
      pid: parseInt(process.pid, 10),
      used_memory: parseFloat(process.used_memory),
    })),
    temperature: {
      current: parseFloat(gpu.temperature.gpu_temp),
    },
  }
}

const getGpuInfo = async (): Promise<IGpu[]> => {
  try {
    const { stdout, stderr } = await spawnProcessExecuteCommand('nvidia-smi', ['-q', '-x'])
    if (stderr) console.warn('get the stderr = %s', stderr)
    if (!stdout) return []

    const xmlLog = await xmlParser.parseStringPromise(stdout)
    const nvidiaSmiLog = xmlLog.nvidia_smi_log
    const driver = nvidiaSmiLog.driver_version
    const cuda = nvidiaSmiLog.cuda_version
    let gpuList = nvidiaSmiLog.gpu
    // With explicitArray: false, a node with a single GPU yields an object instead of an array
    if (!Array.isArray(gpuList)) gpuList = [gpuList]

    return gpuList.map((gpu: any) => ({ ...parseSingleGpuInfo(gpu), driver, cuda }))
  } catch (err) {
    console.error('get gpu info or parse xml error = %o', err)
    return []
  }
}
```

`getGpuInfo()` returns the GPU stats as JSON, for example:

```json
[
  {
    "id": 0,
    "uuid": "GPU-f8f69f8d-80a3-05e0-5322-682cb9926226",
    "driver": "515.65.01",
    "cuda": "11.7",
    "utilization": {
      "gpu": 1,
      "memory": {
        "total": 23028,
        "reserved": 296,
        "used": 0
      },
      "encoder": 0,
      "decoder": null
    },
    "processes": [],
    "temperature": {
      "current": 37
    }
  }
]
```

Display in the frontend

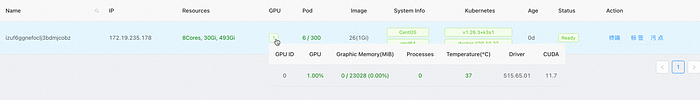

Our frontend application uses React, and the GPU info is shown as a table built with antd's `Table` and `Popover` components.

```tsx
import { Popover, Table } from 'antd'
import * as React from 'react'
// Hypothetical shared module exporting the IGpu interface from the backend code above
import type { IGpu } from './types'

// Green below 70, orange from 70, red from 80 (used for percentages and °C)
const getColorFromNum = (percent: number) => {
  if (percent >= 80) return 'red'
  if (percent >= 70) return 'orange'
  return 'green'
}

const processColumns: any = [
  { align: 'center', dataIndex: 'pid', title: 'PID' },
  { align: 'center', dataIndex: 'type', title: 'Type' },
  {
    align: 'center',
    dataIndex: 'process_name',
    // Show only the executable name; the full command line appears in a popover
    render: (name?: string) => {
      const processNamePath = name?.split(' ')?.[0]?.split('/')?.pop()
      return <Popover content={<span>{name}</span>}><span style={{ cursor: 'pointer' }}>{processNamePath}</span></Popover>
    },
    title: 'Process name',
  },
  { align: 'center', dataIndex: 'used_memory', title: 'Memory(MiB)' },
]

const NodeGPU = ({ gpu }: { gpu: IGpu[] }) => {
  const columns: any = [
    { align: 'center', dataIndex: 'id', title: 'GPU ID' },
    {
      align: 'center',
      render: (_: unknown, gpuInfo: IGpu) => {
        if (!gpuInfo?.utilization) return <span style={{ color: 'red' }}>unknown</span>
        const gpuUtil = gpuInfo.utilization.gpu
        // Pick the color from the number, then format it for display
        return <span style={{ color: getColorFromNum(gpuUtil) }}>{`${gpuUtil.toFixed(2)}%`}</span>
      },
      title: 'GPU',
    },
    {
      align: 'center',
      render: (_: unknown, gpuInfo: IGpu) => {
        if (!gpuInfo?.utilization?.memory) return <span style={{ color: 'red' }}>unknown</span>
        const { total, used, reserved } = gpuInfo.utilization.memory
        // Reserved memory (held by the driver/firmware) is not counted in `used`, so leave it out of the denominator
        const percent = 100 * used / (total - reserved)
        return <span style={{ color: getColorFromNum(percent) }}>{`${used} / ${total} (${percent.toFixed(2)}%)`}</span>
      },
      title: 'Graphic Memory(MiB)',
    },
    {
      align: 'center',
      dataIndex: 'processes',
      render: (_: unknown, gpuInfo: IGpu) => {
        const processes = gpuInfo?.processes
        if (!processes?.length) {
          // undefined: the process list could not be read; empty array: the GPU is idle
          const ele = <span>{processes ? 'gpu is idle' : 'could not get processes'}</span>
          return <Popover content={ele}><span style={{ cursor: 'pointer', color: processes ? 'green' : 'red' }}>{processes ? '0' : 'N/A'}</span></Popover>
        }
        return <div><Table columns={processColumns} dataSource={processes} pagination={false} rowKey='pid' /></div>
      },
      title: 'Processes',
    },
    {
      align: 'center',
      dataIndex: 'temperature',
      render: (temperature?: IGpu['temperature']) => {
        if (temperature?.current == null) return <span style={{ color: 'red' }}>unknown</span>
        return <span style={{ color: getColorFromNum(temperature.current) }}>{temperature.current}</span>
      },
      title: <span>Temperature(℃)</span>,
    },
    { align: 'center', dataIndex: 'driver', title: 'Driver' },
    { align: 'center', dataIndex: 'cuda', title: 'CUDA' },
  ]

  return (
    <div>
      <Table columns={columns} dataSource={gpu} pagination={false} rowKey='id' />
    </div>
  )
}

export default NodeGPU
```
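`NodeGPU` renders the GPUs of a single node. For the multi-node case from the introduction, a page could query every node and render one `NodeGPU` per node. The sketch below assumes each node exposes its `getGpuInfo()` result over HTTP; `fetchAllNodes`, `NodeResult` and the `fetchNode` callback are hypothetical names, and a node that fails is reported with an error instead of breaking the whole page:

```typescript
// One entry per node: its GPUs, or an error message if the node could not be queried
type NodeResult<T> = { node: string, gpus: T[], error?: string }

// Query all nodes in parallel; each failure is caught per node so the others still render
async function fetchAllNodes<T>(
  nodes: string[],
  fetchNode: (node: string) => Promise<T[]>,
): Promise<NodeResult<T>[]> {
  return Promise.all(nodes.map(node =>
    fetchNode(node).then(
      (gpus): NodeResult<T> => ({ node, gpus }),
      (err): NodeResult<T> => ({ node, gpus: [], error: String(err) }),
    )))
}
```

`fetchNode` could wrap `fetch` against whatever stats URL each node exposes, and each entry's `gpus` array can then be passed to `<NodeGPU gpu={gpus} />`.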

Conclusion

With the steps above, we can display GPU stats in our frontend app, which helps users monitor their GPUs at a glance. If anyone has a better way to achieve this, please let me know.

Thanks for reading.